October 2025

Voice AI RAG Pipeline

Dev Patel

Adrian is a voice-powered F1 race engineer that combines RAG (Retrieval-Augmented Generation) over FIA sporting regulations with real-time championship calculations. The goal was an AI assistant that can answer questions like "If Max has 400 points and Lando has 350 with 3 races left, can Lando still win?" and instantly reference specific FIA regulations.

The project uses LiveKit for real-time voice, LangChain for the RAG pipeline, and OpenAI GPT-4 for natural language understanding. What sets Adrian apart is that it combines structured data (championship math) with unstructured data (FIA regulation PDFs) in a single conversational interface. The code is on GitHub (Adrian).

Tech Stack

The architecture has three main parts:

- LiveKit: real-time voice with sub-second latency, using WebRTC-based bidirectional audio between the user and the AI agent.

- LangChain + ChromaDB: the RAG pipeline. The FIA regulations PDF is chunked (1000 characters per chunk, 200-character overlap), embedded with OpenAI embeddings, and stored in ChromaDB for semantic search.

- OpenAI GPT-4: natural language understanding and generation, with function-calling tools for championship calculations and dynamic RAG context.

The frontend is Next.js 15 with LiveKit React components; the backend Python agent runs on LiveKit's agent framework and orchestrates voice, RAG, and tools.

RAG Pipeline

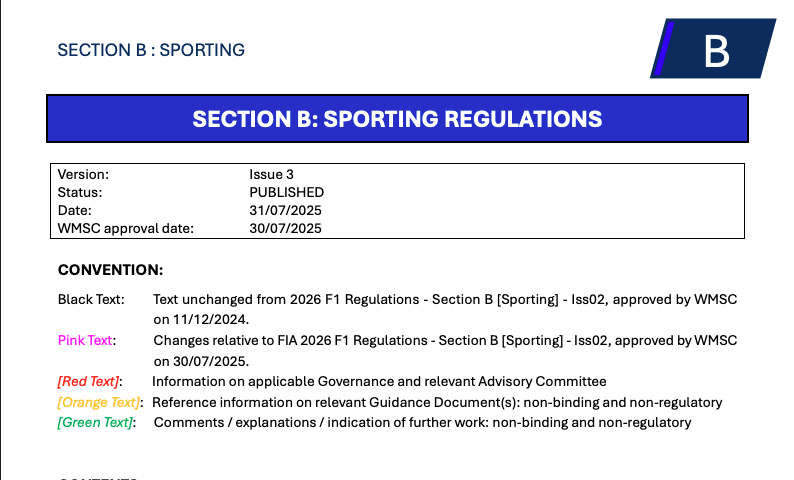

The RAG pipeline is what lets Adrian answer regulation questions. It starts with the FIA Formula 1 Sporting Regulations PDF (200+ pages).

The PDF is loaded with PyPDFLoader and split with RecursiveCharacterTextSplitter: 1000 characters per chunk, 200-character overlap so context carries across boundaries. Chunks are embedded with OpenAI text-embedding-ada-002 (1536-dimensional vectors) so we can match by meaning even when the user’s wording differs.
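To make the chunk size and overlap concrete, here is a minimal sketch of fixed-window chunking in plain Python. It is a simplification, not what the project runs: RecursiveCharacterTextSplitter prefers to break on separators such as paragraph breaks, newlines, and spaces, while this version cuts at exact character offsets. The function name `chunk_text` is made up for illustration.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Split text into fixed-size windows that share `overlap` characters.

    Simplified stand-in for a separator-aware splitter: it ignores
    paragraph and word boundaries and cuts at exact character offsets.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap  # with the defaults, each chunk starts 800 characters after the last
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


# Stand-in for text extracted from the PDF (2500 characters).
sample = "".join(chr(97 + i % 26) for i in range(2500))
chunks = chunk_text(sample)
print([len(c) for c in chunks])
```

With the defaults, the last 200 characters of one chunk are the first 200 of the next, which is how a sentence that straddles a boundary stays intact in at least one chunk.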

Embeddings are stored in ChromaDB. When the user asks something:

- Detect whether the question needs a regulation lookup, e.g. because it mentions "rule", "regulation", or "points system".

- Embed the question with the same model.

- Similarity search in ChromaDB for the top 4 most relevant chunks.

- Inject those chunks into the LLM context with source page numbers.

That gives Adrian access to the full FIA rulebook without loading it all into the context window. Retrieval runs in real time during the conversation.
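The four retrieval steps can be sketched without any external services. Everything below is illustrative: the keyword list comes from the examples above, but the function names, the three-dimensional toy vectors, the placeholder chunk texts, and the choice of cosine similarity are assumptions. In the real pipeline the embeddings come from the OpenAI model and ChromaDB performs the search with whatever distance metric the collection is configured for.

```python
import math

# Keywords taken from the examples in the text; the real trigger list may differ.
REGULATION_KEYWORDS = ("rule", "regulation", "points system")


def needs_regulation_lookup(question: str) -> bool:
    """Step 1: cheap keyword check to decide whether to query the vector store."""
    q = question.lower()
    return any(keyword in q for keyword in REGULATION_KEYWORDS)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], store: list[dict], k: int = 4) -> list[dict]:
    """Step 3: rank stored chunks by similarity to the query and keep the best k."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vec, item["embedding"]),
        reverse=True,
    )
    return ranked[:k]


def build_context(chunks: list[dict]) -> str:
    """Step 4: format the chunks for the LLM, tagging each with its source page."""
    return "\n\n".join(f"[page {c['page']}] {c['text']}" for c in chunks)


# Toy store: placeholder texts, made-up page numbers, 3-d vectors instead of 1536-d.
store = [
    {"page": 1, "text": "(chunk about points)", "embedding": [0.9, 0.1, 0.0]},
    {"page": 2, "text": "(chunk about the pit lane)", "embedding": [0.0, 0.2, 0.9]},
    {"page": 3, "text": "(chunk about tie-breaks)", "embedding": [0.7, 0.6, 0.1]},
]

question = "What's the F1 points system?"
if needs_regulation_lookup(question):
    query_vec = [1.0, 0.0, 0.0]  # step 2 would embed the question; hard-coded here
    print(build_context(top_k(query_vec, store, k=2)))
```

A substring check like this is cheap but blunt (for example, "rule" also matches "ruled"), so it is best read as a first-pass filter rather than a classifier.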

Voice Agent

The voice agent is built on LiveKit's agent framework, which handles WebRTC and real-time audio.

- VAD (Voice Activity Detection): Silero VAD detects when the user starts and stops speaking, so turn-taking feels natural.

- STT (Speech-to-Text): OpenAI Whisper transcribes the audio in real time with sub-second latency.

- LLM: the transcribed text goes to GPT-4, along with function-calling tools for championship math and RAG context for regulations.

- TTS (Text-to-Speech): OpenAI TTS with the "echo" voice, for a calm, professional race-engineer tone.

Adrian’s personality is defined in the system prompt: a veteran F1 race engineer who is calm and technical but clear. He uses phrases like "Let me run those numbers..." before calculations, cites FIA articles when discussing rules, keeps replies to about 30–60 seconds of speech, and reacts to strategies with "Brilliant" or "Questionable call".

The agent has three tools: calculate_championship_scenario, calculate_points_swing, and calculate_pit_stop_time_loss. They provide exact numbers that sit alongside the RAG-retrieved regulation context.
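As an illustration of the kind of arithmetic such a tool performs, here is a minimal sketch of a championship check. Only the tool name comes from the project; the signature, the `Scenario` type, and the scoring assumption are hypothetical. The sketch assumes a maximum of 25 points per remaining race (a win, with no sprint or bonus points) and treats a points tie as still winnable, since tie-breaks are not modelled.

```python
from dataclasses import dataclass

# Assumption: 25 points for a win, no sprint races or bonus points.
MAX_POINTS_PER_RACE = 25


@dataclass
class Scenario:
    gap: int             # points the chaser is behind the leader
    max_available: int   # most points the chaser can still score
    can_still_win: bool  # True if the best case at least draws level


def calculate_championship_scenario(
    leader_points: int,
    chaser_points: int,
    races_left: int,
    max_per_race: int = MAX_POINTS_PER_RACE,
) -> Scenario:
    """Check whether the chaser can still catch the leader on points alone."""
    if races_left < 0:
        raise ValueError("races_left cannot be negative")
    gap = leader_points - chaser_points
    max_available = races_left * max_per_race
    # Best case for the chaser: maximum score every race while the leader scores nothing.
    # Drawing level counts as alive here; a real tie would be decided on countback.
    return Scenario(gap, max_available, max_available >= gap)


# The example from the introduction: Max on 400, Lando on 350, 3 races left.
result = calculate_championship_scenario(400, 350, 3)
print(result)
```

Under these assumptions the gap is 50 points and 75 are still available, so Lando remains mathematically in contention. With only one race left the same gap would be out of reach.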

Demo & Results

Adrian ties structured and unstructured data into one conversational flow. Users can ask "If Max has 400 points and Lando has 350 with 3 races left, can Lando win?" and get immediate math, or "What's the F1 points system?" and get the exact FIA rule with article number.

The voice UI keeps sub-second latency so the conversation doesn’t feel laggy. RAG for regulations plus function-calling for calculations makes the assistant both knowledgeable and precise.

You can try it by cloning the repository and running the backend.

Key Learnings

Building Adrian reinforced how well RAG and structured tools work together: RAG for long-form documents, tools for exact calculations. In voice, latency and personality matter much more than in text chat.

LiveKit’s agent framework made the voice side straightforward. The trickiest part was tuning the RAG pipeline — chunk size, overlap, and retrieval parameters — to get a good tradeoff between relevance and context length.